LiteLLM: The Ultimate AI Super-Tool That’s Changing Everything You Thought You Knew!

Estimated reading time: 12 minutes

Key Takeaways

- LiteLLM is an open-source library providing a **unified API** for over 100 Large Language Models (LLMs).

- It dramatically **simplifies AI development** by enabling easy switching between models without rewriting code.

- Key features include **streaming responses, robust fallbacks, error handling, logging, and cost tracking**.

- Its **Proxy Mode** offers centralized API key management, monitoring, and load balancing for teams.

- LiteLLM enhances **efficiency, scalability, and budget management** for AI projects.

Welcome, future innovators and AI adventurers! Prepare to have your minds blown, because something truly spectacular is making waves in the world of Artificial Intelligence this week. Imagine a secret key, a universal remote control that can command over a hundred powerful AI brains, making them all speak the same language. Sounds like something out of a futuristic movie, right? Well, it’s real, and it’s called **LiteLLM**.

This week, the buzz is all about **LiteLLM**. Despite the name, LiteLLM is not a language model itself: it is a lightweight library that sits between your code and the models. If you’ve ever felt like the world of AI is a giant, complicated puzzle with too many pieces, then LiteLLM is the incredible guide that puts it all together. It’s open source, which means it’s like a magical toolbox freely available to everyone, designed with one epic mission: to make working with super-smart Large Language Models (LLMs) as easy as playing your favorite video game. It offers a single, simple way to talk to over 100 different LLMs! That’s right, with LiteLLM, developers can switch between these powerful AI models without needing to completely rewrite their code every single time. It's like having a superpower that lets you choose any hero for your team with a snap of your fingers, all working perfectly together.

For years, the amazing world of Large Language Models has been growing faster than a speeding rocket. We’ve seen incredible AI tools emerge, each with its own special powers, like brilliant authors, super-fast problem solvers, or creative storytellers. But here's the tricky part: each of these AI brains often speaks a different language, requiring different instructions, different “buttons” to push, and different “menus” to navigate. For the clever folks who build AI applications, this has been a bit like trying to juggle a hundred different instruction manuals at once! It’s confusing, it takes a lot of time, and sometimes, it can be a real headache.

This is where **LiteLLM** bursts onto the scene like a superhero. It sees the complexity and says, “Not anymore!” LiteLLM creates one easy-to-understand system that acts as a universal translator and controller for all these different LLMs. Think of it like a master key that opens all the doors to the AI kingdom. Instead of learning a new password for every single AI you want to use, LiteLLM gives you one password, one set of rules, and suddenly, you have access to a whole universe of AI possibilities. This isn't just a small improvement; it's a giant leap forward in making AI more accessible, more fun, and incredibly powerful for everyone who uses it.

So, buckle up, because we’re about to dive deep into the fascinating world of LiteLLM, exploring its incredible features, how it works its magic, and why it’s set to revolutionize how we interact with the most advanced AI models on the planet!

LiteLLM: The Master Key to Over 100 AI Brains!

Imagine you have a giant toy box filled with the most amazing robots you could ever dream of. Each robot can do something spectacular – one can tell incredible stories, another can solve tricky math problems, and a third can help you write a fantastic essay. But here’s the catch: each robot has its own unique set of buttons and switches. To make the storyteller robot work, you press button A. To make the math robot work, you press button X. It gets confusing fast, right?

Now, imagine someone invents a universal remote control. This remote has just one set of buttons, and no matter which robot you point it at, those buttons work! You can make the storyteller robot tell a tale, then immediately switch to the math robot and solve a puzzle, all using the same simple remote. This universal remote is exactly what LiteLLM is for the world of Large Language Models (LLMs).

LiteLLM is like that super-smart universal remote control for AI. It’s an open-source library, which means it's a collection of tools and instructions freely shared with the world, designed to make talking to all sorts of LLMs incredibly simple. Instead of needing to learn a new, complicated way to speak to each different AI, LiteLLM gives you one straightforward way. This means developers – the clever people who build apps and programs – can use over 100 different LLMs with just one simple “language” or set of instructions. It’s a total game-changer because it means they can easily swap between different powerful AI models without having to change tons of code or spend ages learning new rules. This flexibility and ease of use are what make LiteLLM so incredibly exciting!

Think about it: if you want to build an AI app that sometimes needs a super-creative storyteller and other times needs a fact-checker, LiteLLM lets you do both effortlessly from the same starting point. This saves a lot of time and makes building amazing AI tools much, much faster and more fun.

The Superpowers of LiteLLM: Features That Will Amaze You!

LiteLLM isn't just about making things easy; it’s packed with incredible features that give developers powerful control and flexibility over their AI creations. Let’s explore these superpowers!

1. The Unified API: One Language for All AIs!

Have you ever tried to learn a new language? It can be tough! Now imagine learning over a hundred different languages just to talk to a hundred different AI brains. That's the problem LiteLLM solves with its **Unified API**.

What's an “API”? It's short for Application Programming Interface. Think of an API like a special menu you use to order food at a restaurant. If you go to a pizza place, you use their pizza menu. If you go to a burger place, you use their burger menu. In the world of AI, each powerful LLM used to have its own unique menu, or API. So, if you wanted to use an AI from Google, you'd use their menu. If you wanted to use an AI from OpenAI, you'd use their menu. This meant developers had to learn and remember many different “menus” and how to use them.

LiteLLM changes all that! It offers one “OpenAI-style API syntax” that works for almost all LLMs. The “OpenAI-style” is important because OpenAI's way of talking to AI is very popular and easy to understand. So, LiteLLM essentially says, “Let's all use this one simple menu, no matter which AI ‘restaurant’ we're ordering from!”

This is an enormous leap forward. It means developers don't have to spend ages learning new rules for every single AI they want to use. They learn one simple set of instructions, and suddenly, they can access over 100 different powerful LLMs. This super-simplification boosts “efficiency,” meaning things get done faster and smarter, and provides amazing “flexibility,” allowing developers to experiment with different AIs easily to find the very best one for their project. It’s like having a universal remote that works for every single TV, game console, and media player in the world – all with the same simple buttons!
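Here is a minimal sketch of what “one menu” can look like in code. It assumes the OpenAI-style call shape `completion(model=..., messages=...)` and the reply shape `response.choices[0].message.content`. The names `ask` and `fake_completion`, and the second model string, are illustrative stand-ins (not part of LiteLLM), so the snippet runs without any API keys.

```python
from types import SimpleNamespace


def ask(completion_fn, model, prompt):
    """Send one user prompt through an OpenAI-style completion function."""
    response = completion_fn(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    # OpenAI-style replies keep the text at choices[0].message.content.
    return response.choices[0].message.content


def fake_completion(model, messages):
    """Hypothetical stand-in backend that mimics the reply shape offline."""
    text = f"[{model}] echo: {messages[-1]['content']}"
    message = SimpleNamespace(role="assistant", content=text)
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])


# Only the model string changes between providers; the calling code does not.
for model in ["openai/gpt-4o-mini", "anthropic/claude-3-haiku-20240307"]:
    print(ask(fake_completion, model, "Say hello"))
```

With LiteLLM installed and the right provider keys set, passing `litellm.completion` in place of `fake_completion` should slot into the same `ask` function.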

2. Streaming Responses: AI That Talks Back in Real-Time!

Imagine you're asking a super-smart AI a really long question, like “Tell me a story about a brave knight who saves a kingdom from a grumpy dragon.” If the AI had to think of the entire story, every single word, before it gave you any answer, you might be waiting for a while! That wouldn't be very exciting, would it?

This is where LiteLLM's **Streaming Responses** come in like a magical storyteller. “Streaming” means that the AI doesn't wait to finish its whole answer before it starts talking. Instead, it sends you its answer little by little, as it thinks of it. It's just like how you watch a video online – you don't have to download the whole movie before you start watching; it plays as it downloads!

For AI, this is incredibly useful, especially for “long completions” (when the AI is giving a very long answer) or “interactive applications” (like a chatbot where you're having a conversation). Developers can receive the AI's output “as tokens are generated”. What are “tokens”? Think of them as tiny building blocks of language, like individual words or even parts of words. So, as soon as the AI produces a token, it sends it to you!

This means you get to see the AI's answer appear word by word, or sentence by sentence, right before your eyes. It makes the experience much more dynamic and exciting. If you’re building a chatbot, the user doesn't have to stare at a blank screen waiting for a reply; they see the AI “typing” its answer in real-time, just like a friend texting back! It makes AI feel more alive and responsive, creating a much better and smoother experience for anyone using the AI application.
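To make the “word by word” idea concrete, here is a small sketch of how a program might consume a streamed reply. It assumes OpenAI-style chunks, where each piece of text arrives at `chunk.choices[0].delta.content` (the shape you would expect when asking for `stream=True`). `fake_stream` and `collect_stream` are made-up helper names so the example runs offline.

```python
from types import SimpleNamespace


def fake_stream(text):
    """Hypothetical stand-in that yields OpenAI-style streaming chunks."""
    for word in text.split(" "):
        delta = SimpleNamespace(content=word + " ")
        yield SimpleNamespace(choices=[SimpleNamespace(delta=delta)])
    # Streams commonly finish with a chunk that carries no text.
    empty = SimpleNamespace(content=None)
    yield SimpleNamespace(choices=[SimpleNamespace(delta=empty)])


def collect_stream(chunks, on_token=None):
    """Hand each piece to on_token as it arrives, then return the full reply."""
    parts = []
    for chunk in chunks:
        piece = chunk.choices[0].delta.content
        if piece:  # skip empty or None pieces
            parts.append(piece)
            if on_token is not None:
                on_token(piece)
    return "".join(parts)


full_reply = collect_stream(
    fake_stream("Once upon a time"),
    on_token=lambda piece: print(piece, end="", flush=True),
)
print()
```

The `on_token` callback is where a chatbot would update the screen, which is what gives users that “typing” effect.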

3. Provider Switching and Fallbacks: Always On, Always Working!

What if your favorite AI suddenly decides to take a coffee break, or maybe it’s too busy helping someone else? In the past, this might have meant your AI application would stop working, which would be a big problem! But LiteLLM has a fantastic solution for this, like having a backup team ready to jump into action. This feature is called **Provider Switching and Fallbacks**.

First, let’s talk about “providers.” In the AI world, “providers” are simply the different companies or groups that create and offer these amazing LLMs. Think of them like different brands of delicious ice cream. LiteLLM makes it super easy to “switch between different LLM providers”. All you have to do is change a simple setting, like changing the “model parameter,” and suddenly you’re using a different AI brain. It's like deciding you want chocolate ice cream instead of vanilla, and it’s just one quick scoop away! This means developers can easily experiment with different AIs to find the one that performs best for their needs, or even use different AIs for different parts of their application.

But here’s where it gets really clever: “fallbacks.” Imagine you try to get chocolate ice cream, but the shop has run out! Instead of giving up, a smart shop might offer you vanilla as a “fallback.” LiteLLM does something similar for AI. It has “robust retry and fallback mechanisms”. This means if one AI model or “provider fails” – maybe it's too busy, or there's a tiny technical glitch – LiteLLM can automatically try again. If it still doesn't work, LiteLLM can automatically switch to a different, working AI model!

This ensures “service continuity”, which means your AI application keeps working smoothly without interruptions. It's like having a superhero team where if one hero is tired, another instantly takes their place to keep saving the day! This feature is incredibly important for creating reliable AI tools that users can always count on, making sure that your AI project is always running and ready to help, even if one part of the system has a hiccup.
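The retry-then-fallback idea can be sketched in a few lines of plain Python. This is only an illustration of the pattern, not LiteLLM's own implementation: `complete_with_fallbacks`, `flaky_backend`, and the model names are all hypothetical.

```python
import time


def complete_with_fallbacks(call_model, models, prompt, retries=2, delay=0.0):
    """Try each model in order; retry each one a few times before moving on."""
    last_error = None
    for model in models:
        for _ in range(retries):
            try:
                return model, call_model(model, prompt)
            except Exception as error:  # a real app would catch narrower errors
                last_error = error
                time.sleep(delay)  # brief pause before the next attempt
    raise RuntimeError("all models failed") from last_error


def flaky_backend(model, prompt):
    """Hypothetical backend: the primary model is always too busy."""
    if model == "primary-model":
        raise TimeoutError("primary-model is busy")
    return f"{model} says: hello"


used, reply = complete_with_fallbacks(
    flaky_backend, ["primary-model", "backup-model"], "Say hello"
)
print(used, "->", reply)
```

LiteLLM has its own settings for retries and fallbacks, so in a real project you would normally configure those rather than write this loop by hand.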

4. Error Handling and Logging: The AI’s Detective Kit!

Even the smartest AI can sometimes get things wrong or bump into a problem. When that happens, it's really important to know what went wrong and why. This is where LiteLLM's **Error Handling and Logging** features come into play, acting like a super-smart detective kit for your AI projects.

First, let's talk about “error handling.” An “error” is just a problem or a mistake. Imagine you’re trying to build a tower with building blocks, and one block is missing. That’s an error! LiteLLM “standardizes error classes and structured responses”. What this means is that whenever an error happens, LiteLLM tries to describe the problem in a clear, consistent way. Instead of a confusing message like “Oops! Something broke!”, you might get a message like “The AI model is too busy right now, please try again later.” This “consistent exception management” makes it much easier for developers to understand what happened and how to fix it, like having a clear instruction manual for every possible problem.
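As a rough picture of what “standardized error classes” means, the sketch below maps different failures onto one small family of exceptions. Every class and function name here is invented for illustration; LiteLLM defines its own exception classes, which this example does not try to reproduce.

```python
class LLMError(Exception):
    """Shared base class (hypothetical) for every provider failure."""

    def __init__(self, message, provider, status_code):
        super().__init__(message)
        self.provider = provider
        self.status_code = status_code


class RateLimited(LLMError):
    """The provider asked us to slow down (HTTP 429)."""


class AuthFailed(LLMError):
    """The API key was missing or rejected (HTTP 401)."""


STATUS_TO_ERROR = {401: AuthFailed, 429: RateLimited}


def normalize_error(provider, status_code, raw_message):
    """Turn a provider-specific failure into one of the shared classes."""
    error_class = STATUS_TO_ERROR.get(status_code, LLMError)
    return error_class(f"{provider}: {raw_message}", provider, status_code)


try:
    raise normalize_error("provider-a", 429, "quota exceeded")
except RateLimited as error:
    print("Too busy, try again later:", error)
except LLMError as error:
    print("Something else went wrong:", error)
```

Because every failure shares one base class, the calling code only needs one set of `except` branches no matter which provider raised the problem.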

Then there's “logging.” Think of “logging” as keeping a detailed diary or a trusty notebook where the AI writes down everything it does. Every time the AI gets a request, sends an answer, or encounters a problem, LiteLLM can record it. This “logging” feature is super helpful because it allows developers to “monitor usage” (see how often the AI is being used) and “debug applications” (find and fix mistakes in their AI programs). If something weird happens, they can look back at the log to see exactly what led to the problem, just like a detective looking for clues!

LiteLLM also supports “cost tracking”. Using powerful AI models can sometimes cost money, depending on how much you use them. The cost tracking feature is like having a little accountant for your AI. It keeps track of how much each AI interaction costs. This helps developers and teams “monitor usage” and manage their money effectively, making sure they don't accidentally spend too much. It's an essential tool for keeping AI projects on budget and running smoothly, preventing any unexpected surprises!
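The arithmetic behind the “little accountant” is simple: count the tokens going in and coming out, multiply each by its price, and write the result in the log. The sketch below uses Python's standard `logging` module with made-up model names and made-up prices, purely to show the idea.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("ai-usage")

# Hypothetical prices in dollars per 1,000 tokens; real prices vary by model.
PRICE_PER_1K = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.0100, "output": 0.0300},
}


def call_cost(model, input_tokens, output_tokens):
    """Cost of one call: tokens in each direction times that direction's price."""
    price = PRICE_PER_1K[model]
    input_cost = (input_tokens / 1000) * price["input"]
    output_cost = (output_tokens / 1000) * price["output"]
    return input_cost + output_cost


def record_call(model, input_tokens, output_tokens):
    """Write one log line for the request and return its estimated cost."""
    cost = call_cost(model, input_tokens, output_tokens)
    log.info("model=%s in=%d out=%d cost=$%.6f", model, input_tokens, output_tokens, cost)
    return cost


total = record_call("small-model", 1000, 2000) + record_call("large-model", 500, 100)
print(f"total: ${total:.4f}")
```

LiteLLM keeps its own per-model price data, so in practice you would lean on its cost tracking instead of hard-coding a price table like this one.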

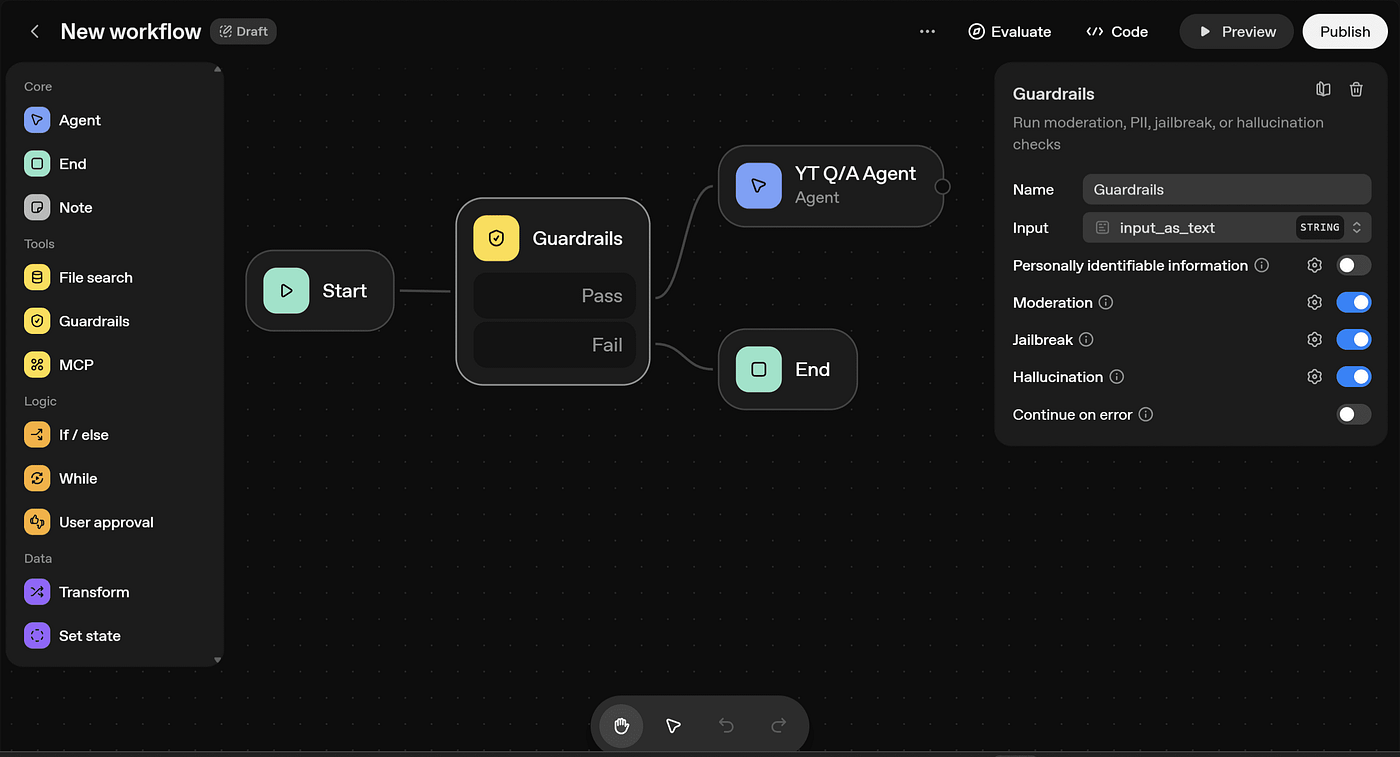

5. Proxy Mode: The Central Command Center for AI!

Imagine you have a huge team working on a very important project, and everyone needs to talk to different AI brains. If everyone on the team had to manage their own secret codes (called API keys) and keep track of how much they were using the AI, it could get messy really fast! That's where LiteLLM's **Proxy Mode** comes in, acting like a central command center or a super-efficient manager for all your AI interactions.

When LiteLLM runs in “proxy mode,” it acts like a special server – a computer that manages requests – that centralizes everything. Instead of each team member directly talking to the AI, they all talk to the LiteLLM proxy server first. The proxy server then forwards their requests to the right AI brain.

Why is this so cool?

- **Centralized Requests**: All requests go through one spot. This makes it easier to keep track of everything and ensures everyone is using the AI in the right way. It’s like all your letters going through one post office before being sent out.

- **Managing API Keys**: Those “API keys” are like secret passwords needed to access the AI. With proxy mode, the team can “manage API keys centrally”. This means only the proxy server needs to know the secret passwords, keeping them super safe and making it much easier to control who has access to what AI. No more sharing passwords individually!

- **Monitoring Usage**: Because all requests go through the proxy, it’s much easier to “monitor usage centrally”. This means a team leader can see exactly how much AI is being used by everyone, which AIs are most popular, and identify any issues quickly. It's like having a single dashboard that shows you everything happening with your AI project.

- **Complex Environments**: This feature is especially beneficial for big teams or “complex environments” where many people are working with many different LLMs. It brings order and simplicity to what could otherwise be a chaotic situation.

Think of it like a control tower at an airport. All planes (AI requests) talk to the control tower (LiteLLM proxy) before landing or taking off. The control tower keeps everything organized, safe, and moving smoothly, even when there are hundreds of planes in the sky! LiteLLM's proxy mode brings that same level of organization and control to managing AI resources, making it perfect for businesses and large projects.
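From a team member's point of view, talking to the proxy is just an ordinary web request aimed at one shared address. The sketch below builds such a request without sending it. The proxy URL, the `/chat/completions` path, and the team key are assumptions for illustration; check your own deployment for the real values.

```python
import json
import urllib.request

# Hypothetical values: point these at wherever your team's proxy actually runs.
PROXY_URL = "http://localhost:4000"
TEAM_KEY = "sk-example-team-key"  # handed out by the proxy, not by an LLM provider


def build_proxy_request(model, prompt):
    """Build (but do not send) an OpenAI-style chat request aimed at the proxy."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{PROXY_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {TEAM_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_proxy_request("openai/gpt-4o-mini", "Say hello")
print(request.get_method(), request.full_url)
# Sending it would be urllib.request.urlopen(request), once a proxy is running.
```

Notice that the provider's real API key never appears in the team member's code. Only the proxy holds it, which is the whole point of managing keys centrally.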

How to Get Started with LiteLLM: Your First Steps into AI Magic!

Now that you know how amazing LiteLLM is, you’re probably wondering, “How do I actually use it?” The good news is, LiteLLM is designed to be user-friendly, offering two main ways to harness its power: through its Python SDK or as a Proxy Server.

LiteLLM Python SDK: Your Personal AI Toolbox

The **LiteLLM Python SDK** is like a personal toolbox for developers who are building new and exciting projects with LLMs. “SDK” stands for Software Development Kit – basically, a collection of tools, libraries, and guides that help you create software. If you're someone who likes to get your hands dirty with coding and build things directly, this is the way to go!

With the Python SDK, developers can “access multiple LLMs directly in Python code”. This means they write their computer programs in Python (a very popular and relatively easy-to-learn programming language), and LiteLLM provides all the special instructions needed to connect to and control various AI models right from that Python code. It’s perfect for individual developers or small teams creating custom AI applications. You get to interact with the AI directly, quickly, and powerfully.

LiteLLM Proxy Server: The AI’s Central Gateway

On the other hand, the **LiteLLM Proxy Server** is built for bigger teams, often called “AI enablement teams,” or for organizations that need to manage AI use across many different projects and users. Remember our “Proxy Mode” discussion? This is where it comes to life!

When LiteLLM runs as a proxy server, it acts as a “central gateway” to all the LLMs. Instead of each developer connecting individually to different AIs, everyone connects to this one central LiteLLM proxy server. This server then intelligently directs their requests to the correct AI model.

Why is this so great for teams?

- **Load Balancing**: Imagine a road with lots of cars. If all cars go down one lane, it gets congested. “Load balancing” is like having many lanes and directing cars to the least busy one. The proxy server can smartly send AI requests to the LLM that is least busy or most efficient, ensuring everyone gets a fast response.

- **Cost Tracking**: As we discussed, the proxy server can keep a central tally of how much AI is being used and how much it costs across all projects and users. This helps big teams manage their budgets much more effectively.

- **Customization**: Teams can set up special rules for “logging and caching per project”. “Caching” is like temporarily saving frequently used information so the AI can answer even faster next time. This means different projects can have different settings, making the AI system super flexible for diverse needs.
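Two of the ideas in this list, load balancing and caching, fit into a toy example. The class below sends each new question to whichever deployment has the fewest requests in progress, and answers repeated questions from memory. `TinyGateway`, `fake_model`, and the deployment names are invented for this sketch and are not how the LiteLLM proxy is built.

```python
class TinyGateway:
    """Toy gateway (hypothetical): least-busy routing plus a reply cache."""

    def __init__(self, deployments, call_model):
        self.in_flight = {name: 0 for name in deployments}
        self.call_model = call_model
        self.cache = {}

    def pick_least_busy(self):
        """Choose the deployment with the fewest requests in progress."""
        return min(self.in_flight, key=self.in_flight.get)

    def ask(self, prompt):
        if prompt in self.cache:  # cache hit: no model call needed
            return self.cache[prompt]
        name = self.pick_least_busy()
        self.in_flight[name] += 1
        try:
            reply = self.call_model(name, prompt)
        finally:
            self.in_flight[name] -= 1  # always release the slot
        self.cache[prompt] = reply
        return reply


calls = []


def fake_model(name, prompt):
    """Stand-in model call that records which deployment was used."""
    calls.append(name)
    return f"{name}: {prompt.upper()}"


gateway = TinyGateway(["deployment-a", "deployment-b"], fake_model)
gateway.in_flight["deployment-a"] = 3  # pretend deployment-a is already busy
print(gateway.ask("hello"))  # routed to the less busy deployment
print(gateway.ask("hello"))  # answered from the cache
print("model calls made:", len(calls))
```

The second `ask` never reaches a model at all, which is why caching saves both time and money on repeated questions.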

So, if you’re a lone wolf developer or part of a small, agile team, the Python SDK is likely your best friend. If you’re part of a larger organization needing centralized control, security, and cost management, the Proxy Server is the powerhouse you’ll want!

Getting LiteLLM Ready: Installation is a Breeze!

Getting LiteLLM onto your computer is incredibly simple, almost like downloading a new game. If you're working with Python (which is usually the case for developers using LiteLLM), you'll use a tool called `pip`. Think of `pip` as a special helper that finds and installs Python programs for you.

To install LiteLLM, you just need to open a special window on your computer called a “terminal” or “command prompt” and type this magic line:

```shell
pip install litellm
```

Once you press Enter, `pip` will whir into action, download LiteLLM, and get it all set up for you. It's usually a very quick process, and then you’re ready to start playing with your new AI universal remote!
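If you would like to double-check that the install worked, a tiny Python script can ask whether the package is visible. This uses only the standard library; `is_installed` is just a helper name chosen for this example.

```python
import importlib.util


def is_installed(package_name):
    """Return True if Python can find the named top-level package."""
    return importlib.util.find_spec(package_name) is not None


if is_installed("litellm"):
    print("LiteLLM is installed and ready to import.")
else:
    print("LiteLLM was not found; try running the pip command again.")
```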

A Glimpse of the Magic: Your First AI Conversation with LiteLLM!

Let's look at a quick example to see just how simple it is to use LiteLLM to talk to an AI. Don’t worry if the code looks a little complex at first glance; we’ll break it down into easy pieces.

Imagine you want to ask a powerful AI to “Say hello in Python.” Here’s how you would do it using LiteLLM:

```python
from litellm import completion

# Assumes your OpenAI API key is set in the OPENAI_API_KEY environment variable.
response = completion(
    model="openai/gpt-4o-mini",
    messages=[{"role": "user", "content": "Say hello in Python"}],
)

# The reply text sits inside the first choice's message.
print(response.choices[0].message.content)
```

Let’s unravel this magic:

- `from litellm import completion`: This line is like saying, “Hey computer, I want to use the LiteLLM tool!” It brings LiteLLM's `completion` function into your program so you can start working with it.

- `response = completion(...)`: This is the exciting part where you actually ask the AI a question! You're telling LiteLLM to “complete” a thought or task for you, and whatever comes back is stored in `response`.

- `model="openai/gpt-4o-mini"`: This tells LiteLLM which specific AI brain you want to talk to. In this case, we're choosing GPT-4o mini, a smaller, cheaper sibling of OpenAI's GPT-4o. Remember how LiteLLM makes it easy to switch models? This is where you pick your AI!

- `messages=[{"role": "user", "content": "Say hello in Python"}]`: This is your actual question or instruction for the AI. You're playing the “user,” and your “content” is “Say hello in Python.” It's like typing your question into a chat box.

- `print(response.choices[0].message.content)`: After the AI thinks and sends back its answer, this line tells your computer to “print” (display) the most important part of the AI's reply. The AI’s answer comes back in a special package, and this line helps you unwrap it to see the actual text.

If you run this code, the AI will think for a moment, and then your computer screen will likely show something like:

```python
print("Hello")
```

Isn't that cool? With just a few lines of code, you've used LiteLLM to connect to a super-smart AI and get it to do something for you! This simple example shows how LiteLLM keeps things clear and easy, letting you focus on what you want the AI to do, rather than getting bogged down in complicated setup.

The Amazing Benefits of LiteLLM: Why It’s a Must-Have!

LiteLLM isn't just a fancy new tool; it brings truly powerful advantages that are reshaping how developers and teams work with Artificial Intelligence. Let’s zoom in on the biggest benefits that make LiteLLM an absolute game-changer.

Simplified Development: Making AI Easier Than Ever Before!

Building apps with AI used to be like trying to build a complex Lego castle where every single brick came from a different, incompatible set. It was frustrating, time-consuming, and often required a lot of specialized knowledge just to get the pieces to fit together. LiteLLM sweeps away all that complexity and offers **Simplified Development**.

By giving everyone a “unified interface” – that single, universal language we talked about – LiteLLM dramatically “reduces the complexity of integrating multiple LLMs into projects“. What does this mean in plain English? It means developers don’t have to spend endless hours learning how to connect to each different AI. They don't have to write different chunks of code for OpenAI, then another for Google, and another for Anthropic. They learn one way, and it works for all of them!

This simplicity allows developers to focus their brilliant minds on the really fun stuff: creating new ideas, designing amazing user experiences, and solving real-world problems with AI. Instead of wrestling with technical differences, they can channel their energy into innovation. It’s like being given a superpower that lets you build things twice as fast with half the effort! This ease of use means more people can get into building with AI, leading to more creative and diverse applications that benefit us all.

Efficiency and Scalability: Growing Big, Staying Smooth!

In the world of technology, “efficiency” means getting things done quickly and well, without wasting time or resources. “Scalability” means being able to grow bigger and handle more users or more data without breaking down or becoming slow. LiteLLM is a champion at both, boosting **Efficiency and Scalability**.

Imagine you’re building a big online game. As more players join, your game needs to handle more action. If your system isn't scalable, it will crash! For AI applications, LiteLLM helps them grow strong and steady.

How does it do this?

- **Easy Model Switching**: Because it’s so simple to switch between different AI models, developers can quickly test which AI works best for a particular task or at a specific time. If one AI is slow or struggling, LiteLLM can easily shift the workload to another one, ensuring smooth operation.

- **Robust Fallbacks**: Remember the “fallback” feature? If one AI or provider temporarily fails, LiteLLM can have a backup ready to step in. This “ensures service continuity”, meaning your AI application is far less likely to go offline when a single provider has trouble. It keeps things running like a well-oiled machine, even when there are bumps in the road.

These features make AI projects much more reliable and robust. They can handle more users, more requests, and more complex tasks without skipping a beat. It’s like having a team of engineers constantly optimizing your car’s engine while you’re driving, ensuring it always runs at its peak performance and can go further than ever before!

Cost Tracking: Keeping Your AI Budget in Check!

Using powerful AI models, especially for big projects, can sometimes come with a price tag. It’s important to know how much you’re spending so you can manage your resources wisely, just like you’d keep track of how much candy you buy! LiteLLM has a special superpower for this: **Cost Tracking**.

LiteLLM “supports cost tracking and budgeting”. This means it can keep a detailed record of how much each interaction with an AI model costs. Why is this so useful?

- **Smart Spending**: Developers and teams can see exactly where their money is going. If one AI model is very expensive for a certain task, they might choose to use a different, more affordable model for that job.

- **Budgeting**: For big projects or companies, setting a budget for AI use is crucial. LiteLLM’s cost tracking helps “manage resource usage effectively”. It’s like having a little accountant who tells you how much you've spent on AI so far, helping you stay within your budget.
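Budgeting builds on cost tracking with one extra rule: stop before the limit is crossed. Here is a toy sketch of that rule. `BudgetTracker` and the dollar amounts are hypothetical, and LiteLLM's own budgeting features are configured differently.

```python
class BudgetTracker:
    """Toy budget guard (hypothetical): refuses charges that would overspend."""

    def __init__(self, limit_dollars):
        self.limit = limit_dollars
        self.spent = 0.0

    def remaining(self):
        """Dollars still available under the limit."""
        return self.limit - self.spent

    def charge(self, cost):
        """Record a cost, or raise if it would push spending past the limit."""
        if self.spent + cost > self.limit:
            raise RuntimeError(
                f"budget exceeded: ${self.spent:.2f} spent of ${self.limit:.2f}"
            )
        self.spent += cost


budget = BudgetTracker(limit_dollars=1.00)
budget.charge(0.40)
budget.charge(0.50)
try:
    budget.charge(0.25)  # this would take spending past the 1.00 limit
except RuntimeError as error:
    print(error)
print(f"remaining: ${budget.remaining():.2f}")
```

A team could keep one tracker per project or per user, so that one runaway experiment cannot eat everyone's budget.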

This feature is incredibly valuable for making sure AI projects are not only powerful and efficient but also financially smart. It empowers teams to make informed decisions about which AIs to use and how to use them, preventing any surprises when the bill arrives. It helps ensure that the amazing power of AI is accessible and sustainable for everyone, from small startups to large corporations.

LiteLLM: The Future is Here, and It's Seamless!

What an incredible journey we’ve taken into the heart of **LiteLLM**! From understanding its core mission to simplifying interactions with over 100 Large Language Models, to marveling at its powerful features like the Unified API, real-time streaming, smart fallbacks, detailed logging, and the mighty Proxy Mode, it’s clear that LiteLLM is not just another tool – it’s a revolutionary leap forward.

LiteLLM is truly a **powerful tool for developers working with LLMs**, offering a “streamlined approach to model integration and management”. It takes the complex, fragmented world of AI and transforms it into an organized, efficient, and exciting playground. For those building the next generation of AI applications, LiteLLM is the key to unlocking unprecedented speed, flexibility, and control.

Imagine a world where the incredible potential of Artificial Intelligence is no longer hidden behind complicated instructions and endless technical hurdles. LiteLLM is bringing that world to life, making it easier than ever for brilliant minds to create, innovate, and solve challenges that once seemed impossible.

So, as we look to the future, remember the name **LiteLLM**. It’s more than just a library; it’s a beacon of simplicity and power in the rapidly evolving landscape of AI. It’s enabling a new era where creating world-changing AI applications is not just for a select few, but for anyone ready to dream big and build bigger. The future of AI is here, and thanks to LiteLLM, it’s going to be incredibly exciting, wonderfully efficient, and remarkably seamless! Get ready to build your own AI magic!

Frequently Asked Questions

What is LiteLLM?

LiteLLM is an open-source Python library, not a language model itself. It provides a unified API to interact with over 100 different Large Language Models (LLMs) from various providers, making AI development simpler and more efficient.

How does LiteLLM simplify AI development?

It offers a single, OpenAI-style API syntax that works across many LLMs, eliminating the need for developers to learn different interfaces. This streamlines integration and reduces development time, allowing focus on innovation.

What is the “Proxy Mode” in LiteLLM?

Proxy Mode allows LiteLLM to act as a central server for all AI requests. It helps in centralized API key management, usage monitoring, load balancing, and cost tracking, making it especially beneficial for larger teams and complex projects.

Can LiteLLM help manage costs for AI usage?

Yes, LiteLLM includes a cost tracking feature that monitors how much each AI interaction costs. This helps developers and teams manage their budgets effectively, make informed decisions about model usage, and prevent unexpected expenses.

How do I get started with LiteLLM?

You can install LiteLLM easily using `pip install litellm` in your terminal. Developers can then use its Python SDK for direct integration into their code, or deploy it as a Proxy Server for centralized team-wide access and management.