What is the Primary Function of the Perception Part of an Agentic AI Loop?

Estimated reading time: 12 minutes

Key Takeaways

- The primary function of AI perception is to sense, collect, and interpret data from the environment, transforming raw input into actionable understanding.

- This involves crucial steps like data gathering, initial processing & interpretation, and contextualization.

- Key technologies enabling AI perception include Computer Vision, Natural Language Processing (NLP), Sensor Fusion, and Optical Character Recognition (OCR).

- Perception is the indispensable first stage in the Agentic AI loop, enabling all subsequent reasoning and action.

- Accuracy in perception is critical; without it, agentic AI cannot operate autonomously or adaptively in dynamic, real-world settings.

Table of Contents

- Unlocking the AI's Eyes and Ears: What is the Primary Function of the Perception Part of an Agentic AI Loop?

- Key Takeaways

- The Amazing World of Agentic AI: A Quick Peek

- Unveiling the AI's Super Senses: What is the Primary Function of the Perception Part of an Agentic AI Loop?

- The AI's Super Powers: Key Aspects of Perception

- Perception in Action: How it Fits into the Agentic AI Loop

- Why Perception is the Heartbeat of Agentic AI: The Foundation of Understanding

- The Thrilling Future of AI Perception

- Conclusion: The Unseen Hero of Intelligent AI

- Frequently Asked Questions

Imagine a world where robots don't just follow simple commands, but truly understand what's happening around them. A world where intelligent computer programs can sense, learn, and then act almost like a person! It sounds like something out of a futuristic movie, right? But believe it or not, this incredible technology is being built right now, piece by astonishing piece. At the heart of these smart AI systems is something called an “Agentic AI Loop,” and one part of this loop is like the AI's very own set of super senses. Today, we're diving deep into an exciting question that's buzzing through the world of artificial intelligence: what is the primary function of the perception part of an agentic AI loop?

Get ready to uncover the secrets behind how AI “sees,” “hears,” and “feels” the digital world, transforming raw information into smart decisions. This is where the magic begins, where an AI moves beyond just following instructions to truly understanding its environment!

The Amazing World of Agentic AI: A Quick Peek

Before we zoom in on perception, let's quickly understand what an Agentic AI Loop is. Think of an agentic AI as a super-smart digital helper that can think for itself to reach a goal. It doesn't just wait for you to tell it every single little thing to do. Instead, it works in a cycle, like a continuous circle of understanding and acting. This circle is often called the “Perceive-Reason-Act” cycle.

- Perceive: This is where the AI takes in information from its surroundings, like a detective gathering clues.

- Reason: Then, it thinks about what those clues mean, figuring out the best plan.

- Act: Finally, it performs an action based on its plan, trying to achieve its goal.

And guess what? After it acts, it perceives again, seeing how its action changed the world, and the loop continues! It's an endless journey of learning and doing. But it all starts with that crucial first step: Perception.
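
To make the cycle concrete, here is a minimal sketch of a Perceive-Reason-Act loop for a toy thermostat agent. Everything in it (the `Room` class, the function names, the half-degree tolerance) is invented for illustration; a real agent would be far richer, but the shape of the loop is the same.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """A toy environment: just a temperature in degrees Celsius."""
    temperature: float


def perceive(room: Room) -> float:
    # Perceive: read the current state of the environment.
    return room.temperature


def reason(observed: float, target: float) -> str:
    # Reason: pick a plan based on what was perceived.
    if observed < target - 0.5:
        return "heat"
    if observed > target + 0.5:
        return "cool"
    return "idle"


def act(room: Room, action: str) -> None:
    # Act: change the environment; the next perceive() will see the result.
    if action == "heat":
        room.temperature += 1.0
    elif action == "cool":
        room.temperature -= 1.0


def run_loop(room: Room, target: float, max_steps: int = 20) -> list:
    """Repeat perceive -> reason -> act until the agent decides to idle."""
    history = []
    for _ in range(max_steps):
        observed = perceive(room)
        action = reason(observed, target)
        history.append(action)
        if action == "idle":
            break
        act(room, action)
    return history
```

Calling `run_loop(Room(18.0), target=21.0)` returns `['heat', 'heat', 'heat', 'idle']`: each pass through the loop starts with a fresh perception of the room, which is how the agent notices that its own actions have changed the world.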

Unveiling the AI's Super Senses: What is the Primary Function of the Perception Part of an Agentic AI Loop?

Hold onto your hats, because here comes the big reveal! The primary function of the perception part of an agentic AI loop is to sense, collect, and interpret data from the environment, transforming raw input into an actionable understanding that can inform reasoning and decision-making processes (sources: IBM Think; IBM on AI agent perception [13]).

Let's break that down, because it's a mighty important job!

Imagine you're playing a game of catch. Your eyes sense the ball flying towards you. Your brain collects all the details – its speed, its spin, its path. Then, your brain interprets that data, understanding that “a ball is coming, I need to put my hands up to catch it.” This whole process, from seeing to understanding, is like the perception part for an AI. It's how the AI takes in information from its surroundings – like a super-smart explorer – and turns that jumble of raw facts into something it can truly use to make smart choices.

Without this incredible ability to perceive, an agentic AI would be completely lost. It would be like trying to play catch in the dark, or trying to understand a story told in a language you don't speak. The perception part is the AI's window to the world, its ability to “see” and “hear” and “read” everything around it, so it can then “think” and “do” amazing things. It's the very first, and arguably the most foundational, step in building truly intelligent and helpful AI systems that can adapt and work in our complex, ever-changing world. This is not just about observing; it's about actively gathering, sifting through, and making sense of a massive flood of information, making it ready for the AI's “brain” to process.

Think of it like this: If the AI is a master chef, then perception is the part that goes to the market, gathers all the freshest ingredients, checks their quality, and prepares them perfectly for cooking. Without great ingredients, even the best chef can't make a world-class meal. And without precise perception, even the smartest AI can't make world-class decisions. This initial stage is truly a marvel, enabling AI to move from being a simple tool to a truly responsive and adaptive entity in its environment.

The AI's Super Powers: Key Aspects of Perception

So, how does an AI actually “perceive” the world? It's not just one simple trick! There are several key aspects, each like a different superpower, that help the perception part of an agentic AI loop do its amazing work.

1. Data Gathering: The AI's Information Collection Agency

This is where the AI casts its net wide to pull in information. Data gathering is all about acquiring real-time information from various sources (sources: AWS Prescriptive Guidance; IBM Think; IBM on AI agent perception [13]). Think of a real-life spy agency collecting intelligence from all over the world, but much, much faster and more precisely!

What kinds of “sources” are we talking about? This is where it gets really exciting:

- Sensors: Imagine a robot exploring Mars. It has special “eyes” (cameras) to see the rocks, “noses” to detect chemicals, and “feelers” to measure temperature. These are sensors! In the AI world, sensors can be anything from actual physical sensors on a robot to digital sensors that detect changes in a computer system. They are constantly feeding the AI fresh data, like tiny digital messengers.

- APIs (Application Programming Interfaces): This sounds complicated, but think of it as a special digital door. When one computer program needs information from another program, it uses an API to “ask” for it. For example, an AI might use an API to ask a weather website for the current temperature, or a traffic app for the best route. It's like having a direct phone line to other information providers!

- Databases: Imagine a giant library filled with millions of facts and figures. That's a database! An AI can quickly search these databases to find important information it needs, like customer names, product prices, or historical data patterns. It's a massive memory bank the AI can tap into instantly.

- Cameras: These are the AI's eyes! Cameras allow an AI to “see” images and videos. Think of self-driving cars that use cameras to see other cars, traffic lights, and pedestrians. Or an AI that can watch a video and tell you what's happening in it. It's giving AI the power of sight, allowing it to observe the visual world around it in incredible detail.

- Microphones: These are the AI's ears! Microphones allow an AI to “hear” sounds and human speech. This is how voice assistants like Siri or Alexa understand your commands. An AI could also listen for alarms, identify different voices, or even detect changes in machinery by the sounds they make. It's giving AI the power of hearing, letting it process the auditory information of its environment.

- User Interactions: This is about how humans communicate with the AI. If you type a question into a chatbot, that's user interaction. If you click a button on a website, that's also information the AI can perceive. The AI learns from what you do and say, constantly gathering insights into your needs and intentions.

This massive collection of data, coming in real-time from so many different places, is the raw material. It's like gathering all the individual pieces of a giant jigsaw puzzle. The more diverse and accurate the data gathering, the richer and more complete the AI's understanding of its world will be. This continuous stream of information is crucial for the AI to stay updated and make relevant decisions in a constantly changing environment.
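
One way to picture data gathering in code is a small collector that polls every source and wraps each result in a common record. This is only a sketch under assumptions: the `Observation` record and the three source functions are hypothetical stand-ins (no real sensor, weather service, or chat system is being called).

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Observation:
    source: str      # where the data came from, e.g. "sensor" or "user"
    payload: Any     # the raw, unprocessed data
    timestamp: float = field(default_factory=time.time)


def gather(sources: dict) -> list:
    """Poll every source once and wrap each result in an Observation."""
    observations = []
    for name, read in sources.items():
        try:
            observations.append(Observation(source=name, payload=read()))
        except Exception as error:
            # A failing source should not stop the others from being read.
            observations.append(
                Observation(source=name, payload={"error": str(error)})
            )
    return observations


# Hypothetical stand-ins for real sources.
def read_temperature_sensor() -> dict:
    return {"celsius": 21.5}


def fetch_weather_api() -> dict:
    raise TimeoutError("weather service did not respond")


def read_user_message() -> str:
    return "Will it rain during my picnic?"


SOURCES: dict = {
    "sensor": read_temperature_sensor,
    "weather_api": fetch_weather_api,
    "user": read_user_message,
}
```

Calling `gather(SOURCES)` yields three observations, one per source. The weather entry carries an error message instead of data, which illustrates a design choice worth considering: one silent source should not blind the agent to everything else.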

2. Initial Processing & Interpretation: Making Sense of the Clutter

Once the AI has gathered all this information, it's usually a giant, messy pile of raw data. Imagine a super messy room full of toys, books, and clothes. Before you can play or read, you need to clean it up and put things in order, right? That's exactly what initial processing and interpretation do!

This aspect is all about cleaning, structuring, and analyzing this data to extract meaningful features, patterns, or signals relevant to the AI's goal or context (source: Gauthmath).

Let's break down these powerful words:

- Cleaning Data: Sometimes the data collected might have errors, be incomplete, or just be plain messy. Cleaning means fixing these problems. For example, if a temperature sensor accidentally sends a reading of “999 degrees,” the AI needs to know that's wrong and either fix it or ignore it. It's like wiping dirt off a window so you can see clearly.

- Structuring Data: Imagine you have a pile of LEGO bricks. They're just a pile until you start organizing them by color or size. Structuring data means putting it into an organized format that the AI can easily understand and work with. For example, turning a jumbled list of words into proper sentences, or organizing numbers into a table. This makes the data much easier for the AI to “read” and process.

- Analyzing Data: This is where the AI starts to become a detective! It looks closely at the cleaned and structured data to find important clues.

- Extracting Meaningful Features: This means picking out the really important bits. If the AI sees an image of a cat, the “features” might be its pointy ears, whiskers, and furry tail. The AI focuses on these key details, not every single pixel.

- Finding Patterns: Humans are great at finding patterns, and so are AIs! If a sensor keeps detecting the same vibration every hour, that's a pattern. If customers usually buy product A and product B together, that's a pattern. Recognizing these patterns helps the AI predict things or understand connections.

- Identifying Signals: A signal is a piece of information that tells the AI something specific. For example, a sudden drop in temperature could be a signal that rain is coming. A certain phrase in a customer's message could be a signal that they are unhappy.

All of this processing and interpretation is done with a specific purpose in mind: to make the data relevant to the AI's goal or context. If the AI's goal is to recommend movies, it won't care about the weather forecast. But if its goal is to plan an outdoor event, the weather is extremely relevant! The AI smartly sifts through everything, focusing only on what helps it achieve its mission. This is where the perception part transforms raw, chaotic information into clear, purposeful insights, ready for the next stage of the agentic loop. It's the critical step where noise becomes information, and mere facts begin to tell a story that the AI can understand.
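
The cleaning and analyzing steps above can be sketched in a few lines. The example below assumes a list of temperature readings in Celsius; the plausible range and the five-degree "sudden drop" threshold are made-up values chosen only to illustrate the idea.

```python
from statistics import mean

VALID_RANGE = (-50.0, 60.0)   # assumed plausible temperatures in Celsius
SUDDEN_DROP_THRESHOLD = 5.0   # assumed size of drop that counts as a signal


def clean(readings: list) -> list:
    """Drop missing values and readings outside the plausible range."""
    low, high = VALID_RANGE
    return [r for r in readings if r is not None and low <= r <= high]


def extract_features(readings: list) -> dict:
    """Summarize cleaned readings into a few features for the reasoner."""
    # Largest fall between two consecutive readings (0.0 if there are none).
    largest_drop = max(
        (earlier - later for earlier, later in zip(readings, readings[1:])),
        default=0.0,
    )
    return {
        "count": len(readings),
        "average": mean(readings) if readings else None,
        "largest_drop": largest_drop,
        "sudden_drop": largest_drop >= SUDDEN_DROP_THRESHOLD,
    }
```

For the raw input `[21.0, 22.0, 999.0, None, 15.0, 14.0]`, `clean` keeps `[21.0, 22.0, 15.0, 14.0]` (the impossible 999 and the missing value are gone), and `extract_features` reports an average of 18.0 and a largest drop of 7.0, which is big enough to raise the `sudden_drop` signal.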

3. Contextualization: Knowing What Matters, When

This next superpower is super clever! Contextualization means ensuring the input is situated within the current task or operational environment so that subsequent reasoning is grounded and accurate.

What does “context” mean? Imagine someone says, “It's cold.”

- If you're inside a warm house on a summer day, “it's cold” means something is wrong with the air conditioning.

- If you're hiking on a snowy mountain, “it's cold” just means it's normal winter weather!

The meaning of “it's cold” changes depending on the situation or context.

For an AI, contextualization is making sure it understands the situation it's in right now. It's not enough for the AI to just see or hear something; it needs to understand why that information is important at this particular moment.

- Situating input within the current task: If an agentic AI's job is to book a flight, then perceiving flight prices, dates, and destinations is important. But perceiving what someone is cooking in the kitchen across the street is probably not relevant. The AI focuses its “attention” on the information that directly relates to what it's trying to do. It understands its own mission and filters out distractions.

- Situating input within the operational environment: This means understanding the surroundings. For a self-driving car, knowing that it's on a highway means different rules apply than if it were in a school zone. The car needs to know if it's day or night, raining or sunny, in a city or on a quiet country road. These environmental details change how the AI should interpret what its sensors are telling it.

Why is this so important? Because it ensures that the AI's next steps – its “reasoning” and “actions” – are grounded and accurate. If an AI doesn't understand the context, it might make really silly or even dangerous decisions. It's like trying to play a board game without knowing the rules, or trying to solve a math problem without understanding what the question is asking.

By carefully considering the context, the perception part of the AI loop ensures that the information it has gathered and interpreted is truly useful and makes sense for the specific situation at hand. It's the AI's way of saying, “Okay, I see this information, but what does it mean for what I'm doing right now?” This deep level of understanding is what separates truly intelligent agents from simple, rule-following machines. It allows for a nuanced and adaptive response to a dynamic world, much like how humans constantly adjust their understanding based on their surroundings.
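
Here is a small sketch of both ideas: filtering input by the current task, and letting the same input ("it's cold") mean different things in different environments. The `Context` fields, the topic table, and the returned labels are all assumptions made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Context:
    task: str       # what the agent is trying to do, e.g. "book_flight"
    location: str   # "indoors" or "outdoors"
    season: str     # "summer" or "winter"


# Assumed mapping from each task to the kinds of input that matter for it.
RELEVANT_TOPICS = {
    "book_flight": {"price", "date", "destination"},
    "plan_picnic": {"weather", "date"},
}


def is_relevant(topic: str, context: Context) -> bool:
    """Situate input within the current task: keep only what relates to it."""
    return topic in RELEVANT_TOPICS.get(context.task, set())


def interpret_cold_report(context: Context) -> str:
    """Situate input within the environment: same words, different meaning."""
    if context.location == "indoors" and context.season == "summer":
        return "possible air-conditioning fault"
    if context.location == "outdoors" and context.season == "winter":
        return "normal conditions"
    return "needs more information"
```

With `Context("plan_picnic", "indoors", "summer")` the report "it's cold" is interpreted as a possible air-conditioning fault, while `Context("plan_picnic", "outdoors", "winter")` gives "normal conditions". Likewise, `is_relevant("weather", ...)` is true for the picnic planner and false for the flight booker.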

4. Technologies Involved: The AI's Amazing Toolkit

How do AIs perform all these incredible perception feats? They use a fantastic toolkit of special technologies! Just like a builder uses different tools for different jobs, an AI uses different technological “senses” to understand its world. Perception in agentic AI leverages several advanced techniques:

- Computer Vision (for images): This is the technology that gives computers “eyes”! Computer vision allows an AI to “see” and “understand” what's in pictures and videos. It can identify objects (like a dog, a car, a tree), faces, and even understand actions (like running or waving). For an agentic AI trying to navigate a room, computer vision helps it identify obstacles, doors, and even people. It's how AI interprets the visual signals that pour in from cameras, turning pixels into recognizable objects and scenes. This technology is behind facial recognition, self-driving cars seeing traffic signs, and robots inspecting products on a factory line.

- Natural Language Processing (NLP) (for text): If computer vision is the AI's eyes, then Natural Language Processing (NLP) is its ability to “read” and “understand” human languages. NLP allows an AI to make sense of written words, whether it's an email, a website, a document, or a conversation you're having with a chatbot. It can understand the meaning of sentences, identify important topics, and even figure out the sentiment (if someone is happy or sad). For an agentic AI helping with customer service, NLP is crucial for understanding what a customer is asking or complaining about. This powerful tool allows AI to comprehend and generate human language, making interactions much more natural and effective.

- Sensor Fusion (for combining multiple data streams): Imagine you're trying to figure out if it's raining. You might look out the window (sight), listen for rain hitting the roof (sound), and maybe even feel the humidity in the air (touch). You're combining information from different senses! Sensor fusion does the same thing for AI. It's a smart way to combine information from multiple different sensors or data sources to get a more complete and accurate picture of the environment (source: IBM on AI agent perception). For example, a self-driving car might combine data from its cameras (seeing a pedestrian), its radar (detecting an object's distance and speed), and its lidar (creating a 3D map of the surroundings). By combining these different “views,” the AI gets a much better and more reliable understanding than if it only used one sensor. It’s like having several expert opinions come together to form one robust conclusion.

- Optical Character Recognition (OCR) (for reading text from images): Have you ever taken a picture of a document and wished you could copy the text from it? That's what OCR does! It's a technology that allows an AI to “read” text that appears in images or scanned documents. So, if an agentic AI is designed to process invoices, OCR would be used to pull out the numbers, dates, and names from a scanned picture of an invoice. This is incredibly useful for turning physical or image-based information into digital text that the AI can then process with NLP. OCR bridges the gap between the visual and the textual, converting static images into dynamic, manipulable data.

- Other Domain-Specific Approaches: Besides these main ones, there are many other specialized tools and techniques for perception, depending on the AI's specific job. For example, an AI designed to diagnose medical conditions might use special algorithms to interpret X-rays or MRI scans. An AI managing a power grid might use specific methods to interpret electrical signals. The world of AI perception is always growing, with new tools being invented all the time to help AI understand more and more of our complex world.

These technologies are the backbone of the perception stage, transforming the raw sensory bombardment into coherent, structured, and meaningful data that the AI can then use to reason and act. Each technology provides a unique lens through which the AI can examine its environment, and when used together through techniques like sensor fusion, they create an incredibly rich and detailed understanding.
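
Sensor fusion can be done in many ways. One simple, well-known option is inverse-variance weighting, where each sensor's estimate counts more the less noisy it is. The sketch below uses that technique as an illustration only; it is not a claim about how any particular vehicle or product fuses its sensors, and the numbers are invented.

```python
def fuse_estimates(estimates: list) -> tuple:
    """Combine (value, variance) pairs with inverse-variance weighting.

    Each estimate is weighted by 1 / variance, so precise sensors
    dominate and noisy ones contribute less.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = (
        sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    )
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance-to-pedestrian estimates in meters: (value, variance).
CAMERA = (20.0, 4.0)   # good at recognizing objects, rough on distance
RADAR = (19.0, 1.0)    # better distance estimate
LIDAR = (19.2, 0.25)   # most precise distance estimate
```

Calling `fuse_estimates([CAMERA, RADAR, LIDAR])` gives a distance of roughly 19.2 m with a variance of about 0.19, which is lower than the variance of any single sensor. That is the "several expert opinions" idea in numbers: the combined answer is more trustworthy than each opinion alone.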

Perception in Action: How it Fits into the Agentic AI Loop

We've explored what perception is and how it works. Now, let's see how this incredible “sensing” superpower fits perfectly into the bigger picture of the agentic AI loop – that continuous perceive–reason–act cycle.

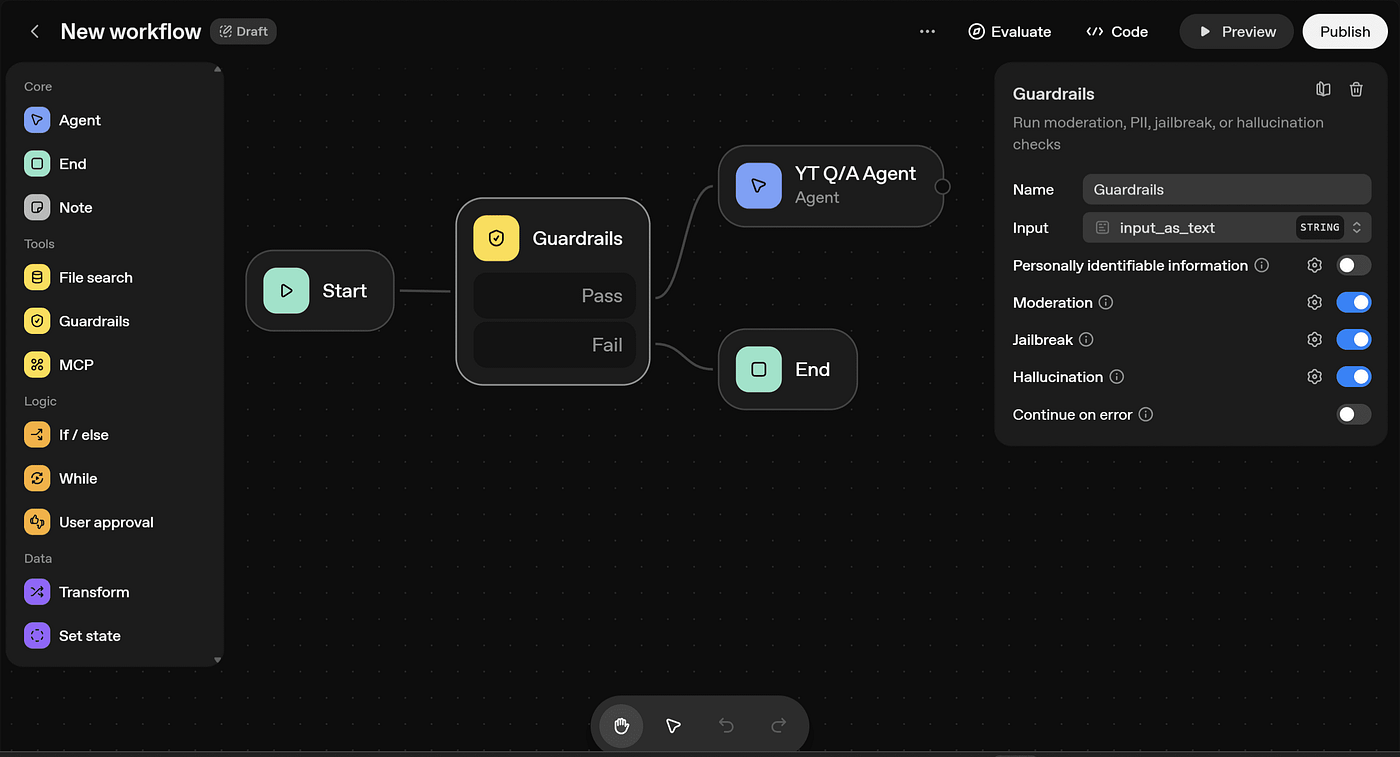

Perception isn't just one step among others; it's the indispensable first stage that converts environmental signals into information the agent can reason about and act upon[11][13]. Think of it as the starting gun for a race. Nothing else can happen until perception does its job.

Let's look at some real-world examples to see this in action:

In an Autonomous Vehicle (Self-Driving Car)

- Perception: Imagine a self-driving car cruising down the road. Its cameras are constantly scanning, its radar is detecting objects, and its lidar is building a 3D map. This perception stage involves identifying obstacles (like a parked car or a fallen tree branch), signage (like a stop sign or a speed limit sign), and pedestrian movement (someone stepping off the curb) through sensors. It's taking in all this visual and spatial data, cleaning it up, understanding what each object is, and where it is in relation to the car.

- Reasoning: Once the car perceives a stop sign and a pedestrian about to cross, its “reasoning” part kicks in. It thinks: “Okay, I see a stop sign, so I must stop. I also see a pedestrian, so I need to be extra careful and wait for them to cross safely.” It calculates the braking distance, the pedestrian's speed, and plans its action.

- Action: Finally, the “action” part of the loop makes the car slow down, activate its brakes, and come to a smooth stop. Notice that perception had to finish its job before any navigation or control decisions could be made. After stopping, the perception stage runs again, observing the pedestrian crossing, checking for other traffic, and confirming the stop sign. Only when it perceives it's safe to proceed will it reason and act to move forward.
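
To show how perceived values feed the reasoning step, here is a rough sketch of the braking-distance check mentioned above. It uses the standard constant-deceleration formula d = v² / (2a); the deceleration of 6 m/s², the 1.5× safety margin, and the function names are assumptions for illustration, not real vehicle parameters.

```python
def braking_distance(speed_mps: float, deceleration_mps2: float = 6.0) -> float:
    """Distance needed to stop from speed_mps at constant deceleration."""
    return speed_mps ** 2 / (2.0 * deceleration_mps2)


def choose_action(
    speed_mps: float,
    distance_to_stop_line_m: float,
    pedestrian_ahead: bool,
    safety_margin: float = 1.5,
) -> str:
    """Turn perceived facts into a decision: "brake" or "continue"."""
    needed = braking_distance(speed_mps) * safety_margin
    if pedestrian_ahead or distance_to_stop_line_m <= needed:
        return "brake"
    return "continue"
```

At 15 m/s (about 54 km/h), `braking_distance(15.0)` is 18.75 m. With a stop line 100 m away and no pedestrian, `choose_action` returns `"continue"`; at 25 m, or whenever a pedestrian is perceived, it returns `"brake"`. Every input to this decision comes straight from perception, so a wrong reading means a wrong decision.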

In Business Process Automation (Smart Office Helper)

- Perception: Imagine an agentic AI designed to help a busy office. Its perception task might involve watching an email inbox for new messages, scanning uploaded documents, or monitoring activity in a customer relationship management (CRM) system. Here, perception might capture and understand structured and unstructured data from APIs or documents to enable downstream decision-making. For instance, it might use OCR to read a new invoice that just arrived as a PDF, or use NLP to understand the subject line of an urgent customer email. It extracts key information like the customer's name, the problem they're facing, or the amount due on an invoice.

- Reasoning: Once the AI perceives an urgent email about a customer issue, its reasoning module might think: “This is an urgent request from a high-priority customer. I need to find the relevant information about their account and escalate this to the right department.”

- Action: The “action” could be automatically creating a ticket in the support system, drafting a response email to the customer, or sending an alert to a human manager. The loop continues as the AI perceives the new status of the ticket or the manager's response.
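
As a sketch of the invoice example, the snippet below pulls a few fields out of text that is assumed to have already come out of an OCR step (no OCR library is called here). The invoice layout, the field labels, and the regular expressions are all hypothetical; real invoices vary widely and usually need more robust parsing.

```python
import re

# Assumed label formats for a simple, made-up invoice layout.
FIELD_PATTERNS = {
    "invoice_number": r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)",
    "date": r"Date\s*:\s*(\d{4}-\d{2}-\d{2})",
    "amount_due": r"Amount\s+Due\s*:\s*\$?([\d,]+\.\d{2})",
}


def extract_invoice_fields(text: str) -> dict:
    """Find each known field in the text; missing fields become None."""
    fields = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = re.search(pattern, text, flags=re.IGNORECASE)
        fields[name] = match.group(1) if match else None
    if fields["amount_due"] is not None:
        # Turn "1,250.00" into the number 1250.0 for later arithmetic.
        fields["amount_due"] = float(fields["amount_due"].replace(",", ""))
    return fields


SAMPLE_OCR_TEXT = """ACME Supplies
Invoice No: INV-2041
Date: 2024-03-15
Amount Due: $1,250.00
"""
```

Running `extract_invoice_fields(SAMPLE_OCR_TEXT)` returns `{'invoice_number': 'INV-2041', 'date': '2024-03-15', 'amount_due': 1250.0}`. Returning `None` for a field that was not found, rather than guessing, lets the reasoning stage decide whether to ask a human for help.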

In both these examples, you can clearly see that without the incredibly important perception stage, the AI wouldn't even know where to begin! It wouldn't know there was a stop sign, a pedestrian, an urgent email, or an invoice. Perception is the AI's essential bridge to the physical and digital world, providing all the necessary information for it to become truly intelligent and useful. It's the critical link that empowers AI to move from being a passive program to an active, informed, and truly capable agent.

Why Perception is the Heartbeat of Agentic AI: The Foundation of Understanding

So, what have we learned about this astonishing part of agentic AI? In summary, the perception stage's primary role is to enable AI to “understand its environment” by transforming ambient inputs into knowledge, forming the foundation for all subsequent reasoning and action within the agentic AI loop [13].

Think of it this way: for an AI to be truly smart and helpful, it needs to be aware of what's going on around it. Just like you need to see, hear, and feel to understand your surroundings, an AI needs its own set of “senses” to gather information. The perception part of the loop is precisely these senses. It's not just about collecting raw data; it's about meticulously processing, interpreting, and contextualizing that data, refining it into meaningful knowledge. This knowledge is the bedrock upon which all intelligence is built. Without this careful transformation, raw data remains just noise, unusable for any intelligent decision-making.

Consider the immense complexity of our world – endless sights, sounds, texts, and digital signals. The perception stage is the AI's masterful skill in sifting through this constant stream, identifying what's important, and preparing it for deep thought. It's the difference between a simple computer program that follows a fixed script and a truly “agentic” AI that can adapt, learn, and respond intelligently to new situations.

The implications of poor perception are huge, even frightening. Imagine our self-driving car example again. If its perception system fails to detect a pedestrian, or misinterprets a stop sign, the consequences could be catastrophic. If a business automation AI misreads an important detail on an invoice, it could lead to financial errors. This highlights a critical truth: Without accurate perception, agentic AI cannot operate autonomously or adaptively in dynamic settings[13].

Accuracy in perception is not just a nice-to-have; it's absolutely essential. It directly impacts the safety, reliability, and effectiveness of any agentic AI system. A slight misinterpretation or a missed piece of data can lead to wrong decisions, inefficient actions, or even dangerous outcomes. That's why the brilliant minds working on AI are constantly striving to make perception systems more robust, more accurate, and more capable of understanding the nuances of the real world. They are building AI “eyes” and “ears” that can rival, and in some cases even surpass, our own human capabilities in speed and detail.

The Thrilling Future of AI Perception

As we look ahead, the future of AI perception is incredibly thrilling! We're talking about AIs that can:

- Understand emotions: Imagine an AI that doesn't just understand the words you type, but also senses if you're feeling frustrated or happy from your tone of voice or facial expressions.

- Anticipate events: AIs that can perceive subtle changes in patterns and predict what might happen next, like predicting a machine breakdown before it occurs, or spotting early signs of a shift in economic trends.

- Learn from new experiences: A perception system that continuously improves its understanding the more it interacts with the world, much like a child learning new things every day.

- Perceive abstract concepts: Moving beyond just seeing objects, to understanding complex ideas, relationships, and even subtle social cues.

The advancements in computer vision, natural language processing, and sensor technologies are happening at a breathtaking pace. We are rapidly approaching a future where agentic AIs, powered by incredibly sophisticated perception systems, will become indispensable partners in almost every aspect of our lives – from enhancing healthcare and optimizing our cities to revolutionizing how we learn and work.

The ability to accurately and comprehensively perceive the world is the critical bottleneck, and the most exciting frontier, in making AI truly intelligent. The better AI can perceive, the better it can reason, and the better it can act. This core function is the gateway to unleashing the full, transformative power of artificial intelligence.

Conclusion: The Unseen Hero of Intelligent AI

Today, we've taken an incredible journey into the brain of an agentic AI to answer one question: what is the primary function of the perception part of an agentic AI loop? We discovered that perception is the AI's vital set of super-senses, its ability to sense, collect, and interpret data from its environment, transforming a whirlwind of raw information into usable knowledge.

From gathering real-time data through cameras, microphones, and APIs, to expertly cleaning, structuring, and analyzing that data, and then making sure it all makes sense within the correct context – the perception stage is a marvel of modern technology. Technologies like computer vision and natural language processing are the powerful tools that make this possible.

Without this incredible foundation of accurate perception, agentic AI would be blind and deaf to the world, unable to reason or act autonomously. It is the indispensable first step, the heartbeat, and the very window through which AI can finally “understand” its environment. As these perception systems grow even more sophisticated, we stand on the brink of an exhilarating future where AI will not just perform tasks, but truly comprehend and adapt to our dynamic world, making intelligent decisions that will shape tomorrow. The adventure of AI has just begun, and its ability to perceive is leading the way!

Frequently Asked Questions

- What is an Agentic AI Loop?

An Agentic AI Loop is a continuous cycle, often called “Perceive-Reason-Act,” where an AI system takes in information from its environment (Perceive), processes that information to make decisions (Reason), and then performs actions based on those decisions (Act). This loop allows AI to operate autonomously towards a goal.

- What are the main components of the perception part of an Agentic AI Loop?

The perception part primarily involves three key aspects: Data Gathering (acquiring information from various sources like sensors, APIs, databases), Initial Processing & Interpretation (cleaning, structuring, and analyzing data to find meaningful patterns), and Contextualization (situating the input within the current task and environment for accurate reasoning).

- What technologies enable AI perception?

Several advanced technologies enable AI perception, including Computer Vision (for understanding images and videos), Natural Language Processing or NLP (for text and speech), Sensor Fusion (for combining data from multiple sensors into a comprehensive view), and Optical Character Recognition or OCR (for reading text from images).

- Why is accurate perception crucial for Agentic AI?

Accurate perception is the foundation of an Agentic AI's ability to “understand its environment.” Without it, the AI cannot correctly interpret what's happening, leading to flawed reasoning, ineffective actions, or even dangerous outcomes. It's essential for autonomous and adaptive operation in dynamic settings.

- Can AI perceive emotions in the future?

Yes, the future of AI perception is expected to include understanding emotions. Advancements in areas like natural language processing and computer vision are moving towards AIs that can sense emotions from tone of voice, facial expressions, and textual cues, leading to more nuanced and human-like interactions.
